For open science to advance, it is essential to monitor its practices in order to assess meaningfully whether they are achieving their intended goals for research and society. The Open Science Monitoring Initiative (OSMI) was established to help the community assess the adoption and impact of open science across the research ecosystem and beyond. One of its areas of focus is understanding how content providers monitor both open research outputs and the outcomes enabled by open outputs and practices. A recent survey of publishers, repositories, and content platforms shows genuine momentum in the adoption of open science monitoring, alongside diverse areas of interest and uneven maturity. The results show that existing approaches are often driven by policy compliance and reporting obligations, with a conspicuous gap between indicators for open objects and evidence for the downstream impact of openness. The findings point to opportunities to strengthen practices among scholarly content providers through greater methodological alignment and better support for newcomers adopting monitoring practices.

Why OSMI ran this survey

Focusing on scholarly content providers, OSMI’s Working Group 3 designed a survey to identify current monitoring practices among journals, repositories, and other providers, including their motivations for monitoring, their implementation choices and challenges, and the enablers that matter most to those not yet carrying out monitoring. Throughout the survey, the group applied the following definitions: open outputs are open research objects such as open-access articles, datasets, software, or other objects; open outcomes are downstream activities or effects associated with openness, including reuse, collaboration, and societal engagement. The survey was circulated among OSMI members. While it cannot be considered representative of the scholarly communications sector as a whole, it provides valuable insights into current practices and the areas of need for advancing monitoring across these key constituencies.

Who responded, and motivations (and risks) to watch

The survey received 51 complete responses. The highest representation came from publishers and journals (39%), followed by librarians (19%) and repositories or archives (16%). This mix reflects the diversity of providers that develop, manage, and operate infrastructure and platforms for the sharing, aggregation, preservation, and evaluation of scholarly content. Figure 1 summarizes a central finding: 70.1% of respondents report carrying out open science monitoring activities, 23.4% do not, and 6.5% are unsure.

Fig. 1: Respondent composition by open science monitoring status (Yes / No / Do not know)

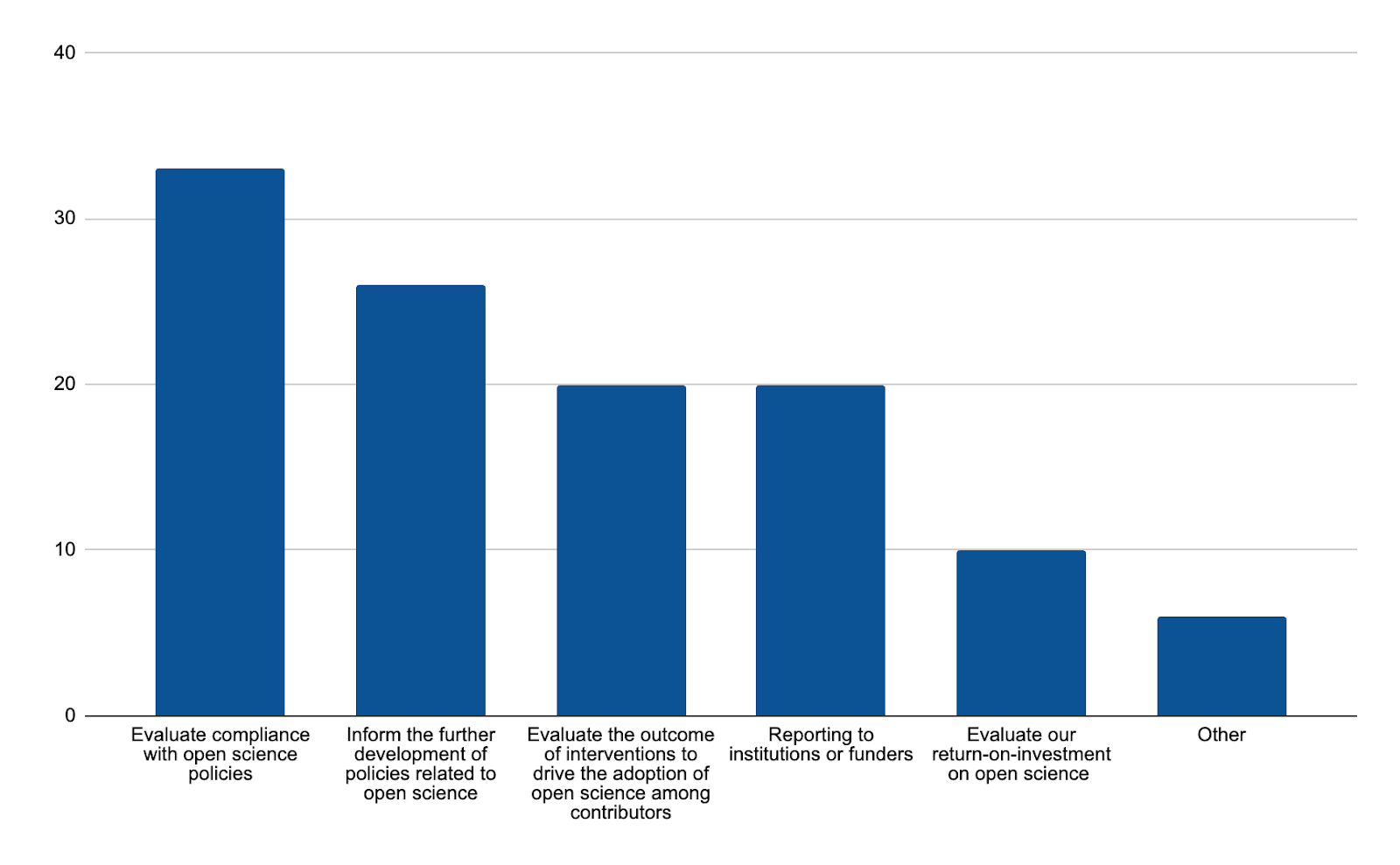

Motivations for monitoring open science are pragmatic (Figure 2). Respondents point first to compliance and alignment with open science policies, followed closely by the use of evidence to inform future policy development and the need to report to institutions and funders. Transparency to stakeholders and a desire for data-driven assessment and benchmarking reinforce the growing interest in comparable, reliable indicators. Yet one data point stands out: only ten responses referenced return-on-investment (ROI) tracking.

Fig. 2: Reasons that motivated the implementation of open science monitoring

The responses may reflect the substantial work carried out in recent years to create open science policies (for example, for open access or open data) and approaches to evaluate compliance with them, while common frameworks for the factors that underpin an economic return on our investments in open science (ROI) still require development. This leaves gaps in our understanding of downstream reuse and in the evidence available to make the economic case for the budgets and resourcing needed to advance openness. A possible first step toward closing these gaps is to implement proxies that can build evidence for reuse and ROI narratives, such as measures of reuse, deposit latency, and license clarity for all outputs.
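As a rough illustration of how two of these proxies might be computed, the sketch below derives deposit latency (days from publication to repository deposit) and license clarity (the share of outputs with an explicit license) from a handful of records. The record fields (`published`, `deposited`, `license`), the sample values, and the helper names are hypothetical assumptions for illustration only; they do not reflect any respondent's schema or an OSMI-endorsed definition.

```python
from datetime import date
from statistics import median

# Hypothetical output records; field names and values are illustrative assumptions.
outputs = [
    {"id": "a1", "published": date(2024, 3, 1), "deposited": date(2024, 3, 15), "license": "CC-BY-4.0"},
    {"id": "a2", "published": date(2024, 5, 10), "deposited": date(2024, 9, 7), "license": None},
    {"id": "a3", "published": date(2024, 6, 2), "deposited": None, "license": "CC0-1.0"},
]

def deposit_latency_days(record):
    """Days from publication to repository deposit, or None if never deposited.

    A negative value would mean the output was deposited before publication
    (for example, as a preprint).
    """
    if record["deposited"] is None:
        return None
    return (record["deposited"] - record["published"]).days

def license_clarity_share(records):
    """Share of outputs that carry an explicit license string."""
    return sum(1 for r in records if r["license"]) / len(records)

# Only outputs that were actually deposited contribute to the latency summary.
latencies = [d for d in map(deposit_latency_days, outputs) if d is not None]
print("median deposit latency (days):", median(latencies))
print("license clarity share:", round(license_clarity_share(outputs), 2))
```

Reporting a median rather than a mean keeps a few very late deposits from dominating the summary; whether undeposited outputs should be excluded, as here, or counted separately is itself a methodological choice worth documenting.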

What is being monitored: policies, outputs, and outcomes

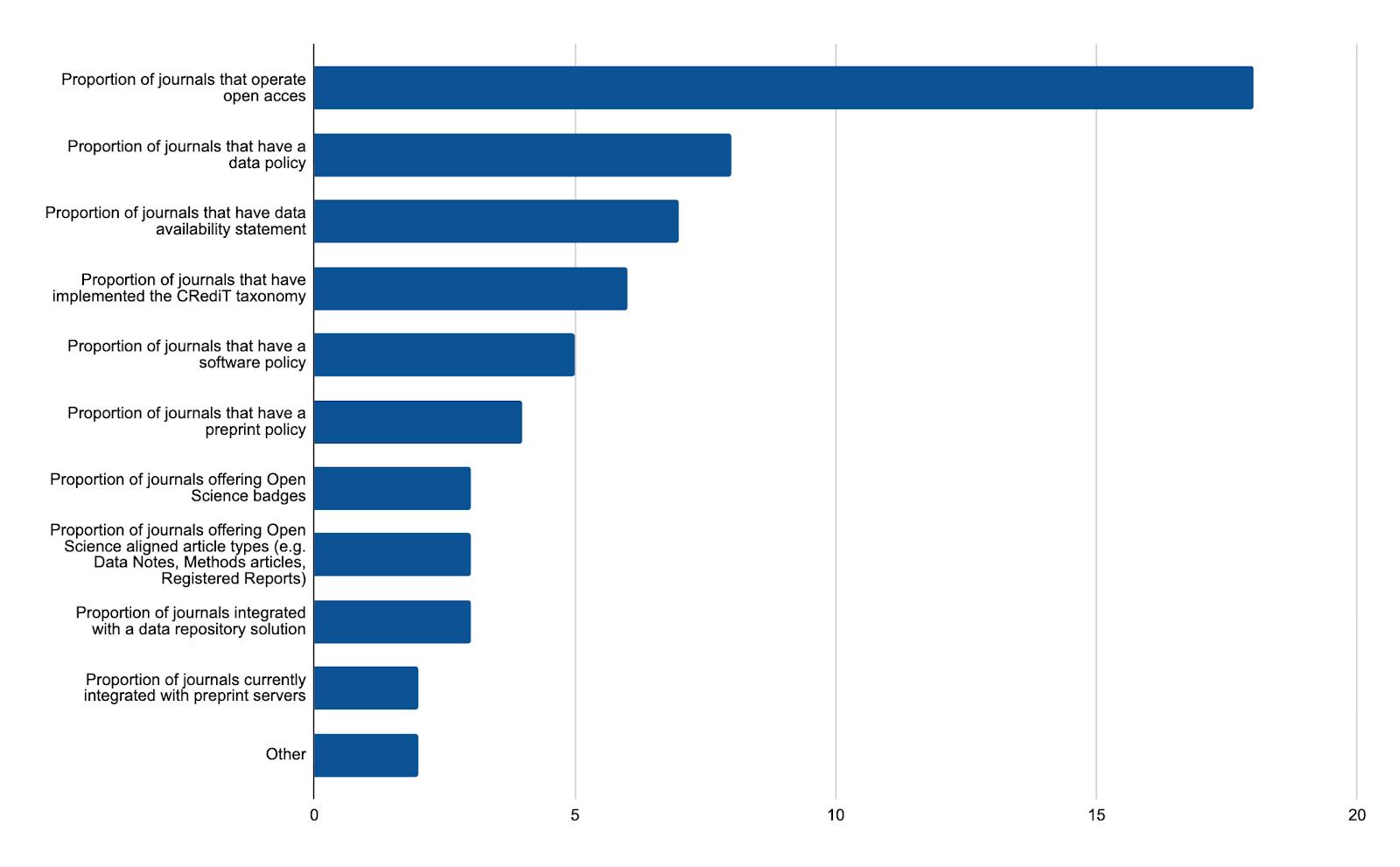

What is monitored today largely mirrors the policies and processes already in place at journals, repositories, and platforms. Tracking policy-level indicators is a mature practice among publishers. Publishers most commonly track the proportion of journals in their portfolio that are open access, the share of journals with a data policy, and the prevalence of data availability statements. Far fewer track integrations with preprint servers or data repositories, which require interoperability with external partners (Figure 3).

Fig. 3: Indicators related to open science policies that publishers currently collect as part of their open science monitoring

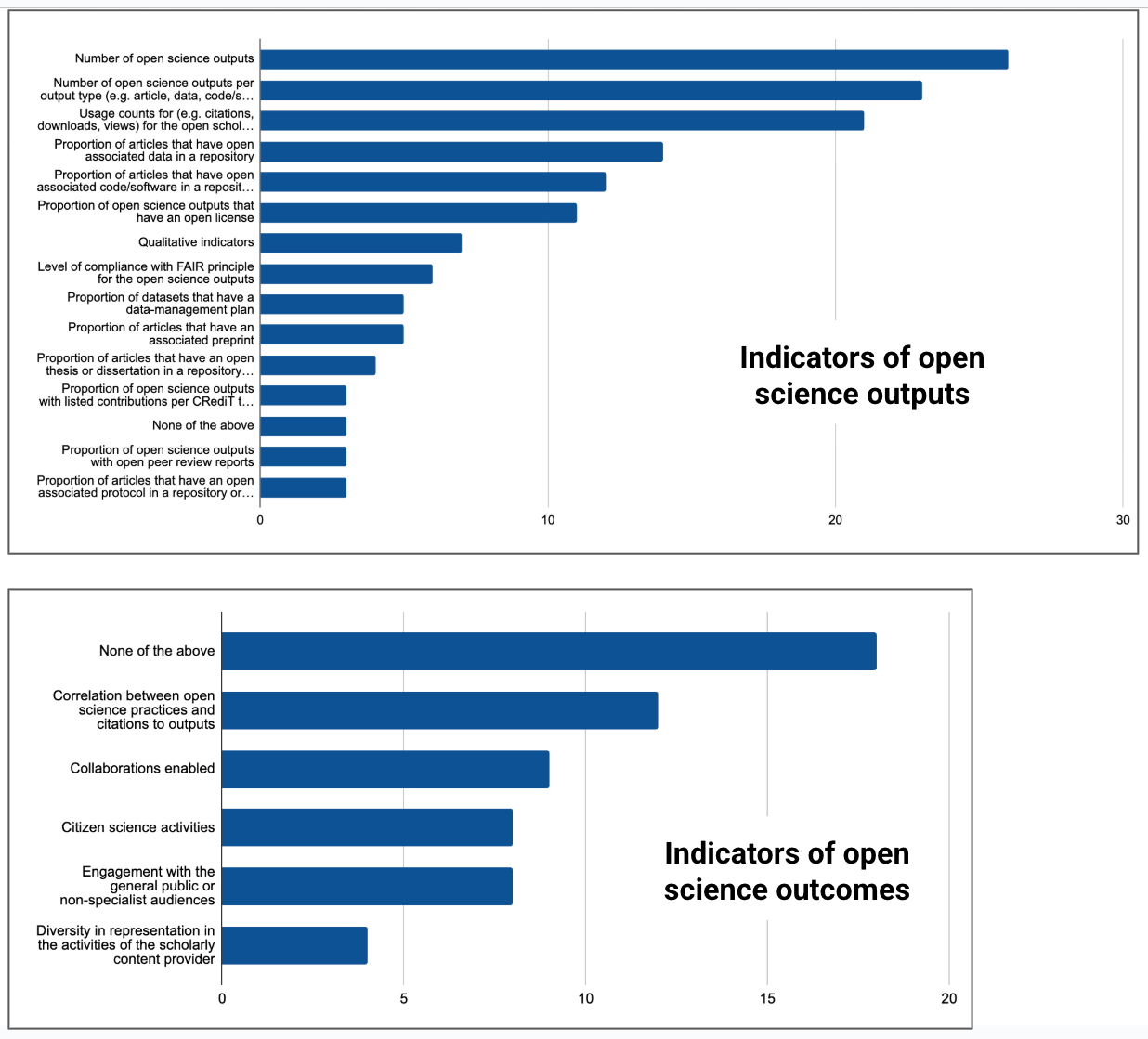

Open output indicators are both common and varied. Organizations measure and segment open outputs by type (articles, datasets, software, etc.), track usage counts such as citations, downloads, and views, and record the proportion of articles with associated data and software (Figure 4). Outcome indicators are much less common, and most respondents indicated that they do not monitor outcomes. Among those who do, approaches include examining the correlation between openness and citations, tracking the collaborations enabled, recording citizen-science and public-engagement activities, and measuring the diversity of the communities involved (Figure 4).

Fig. 4: Indicators of open science outputs and open science outcomes collected

Methodological variation in indicators deserves special attention because it directly affects comparability across content providers. For example, respondents make different operational choices for the indicator “article with open data”. Some accept any form of data presence (from repositories to supporting information), while others restrict the numerator to repository-hosted datasets. Some determine presence through author declarations in the article, others through automated text-mining. Some set the denominator to all articles in a journal, others only to articles that generate data. The use of AI tools for monitoring remains uncommon, but emerging approaches (again, mostly text-mining of the full text to identify associated open objects) are beginning to appear.
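The effect of these choices can be seen with a toy calculation. The sketch below computes the same “article with open data” share under the two numerator choices (any data presence vs. repository-hosted only) and the two denominator choices (all articles vs. data-generating articles). The `Article` type, its fields, and the four sample articles are hypothetical. How presence is detected (author declaration vs. text-mining) is a further axis that is not modeled here.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Article:
    # Hypothetical fields; a real pipeline would derive them from metadata or full text.
    generates_data: bool
    data_location: Optional[str]  # "repository", "supporting_information", or None

def open_data_share(articles, repository_only=False, data_generating_only=False):
    """Share of articles with open data under one operational definition."""
    # Denominator choice: all articles, or only those that generate data.
    denominator = [a for a in articles if a.generates_data] if data_generating_only else list(articles)
    if not denominator:
        return 0.0
    # Numerator choice: any data presence, or repository-hosted datasets only.
    accepted = {"repository"} if repository_only else {"repository", "supporting_information"}
    numerator = [a for a in denominator if a.data_location in accepted]
    return len(numerator) / len(denominator)

articles = [
    Article(True, "repository"),
    Article(True, "supporting_information"),
    Article(True, None),
    Article(False, None),
]

for repo_only in (False, True):
    for gen_only in (False, True):
        share = open_data_share(articles, repo_only, gen_only)
        print(f"repository_only={repo_only}, data_generating_only={gen_only}: {share:.0%}")
```

The same four articles yield shares of 50%, 67%, 25%, and 33% depending on the definition, which is why publishing the numerator, denominator, and detection method alongside each indicator matters for comparison across providers.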

While experimentation is to be expected, methodological diversity risks producing indicators that are not comparable across platforms. The Working Group will pursue this area, supporting transparent approaches that allow indicators to be interpreted across content providers.

How monitoring gets done in practice

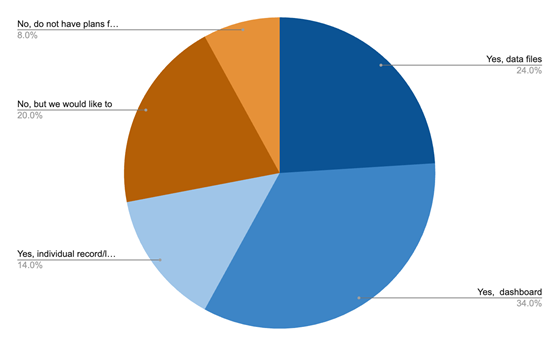

Annual reporting predominates, a cadence that supports year-to-year comparability but can limit more dynamic updates or course correction. The data sources used for monitoring are varied and rely heavily on persistent identifiers (PIDs) and their metadata. The sources respondents mentioned most commonly were Crossref, DataCite, OpenAlex, Web of Science, Scopus, and CRIS systems. Transparency in sharing open science monitoring information also varies (Figure 5): 34% of respondents share their indicators via public dashboards, 24% provide downloadable data files, 20% do not yet share but would like to, and 8% have no plans to share. Estimates of the resources dedicated to open science monitoring split into two clusters, “0.5 full-time equivalent (FTE) or less” and “1 FTE or more”, possibly reflecting differences in the scope of monitoring and in the resources available to content providers operating in different contexts.

Fig. 5: Percentage share, by modality, of open science monitoring indicators collected by respondents

What ‘newcomers’ say they need

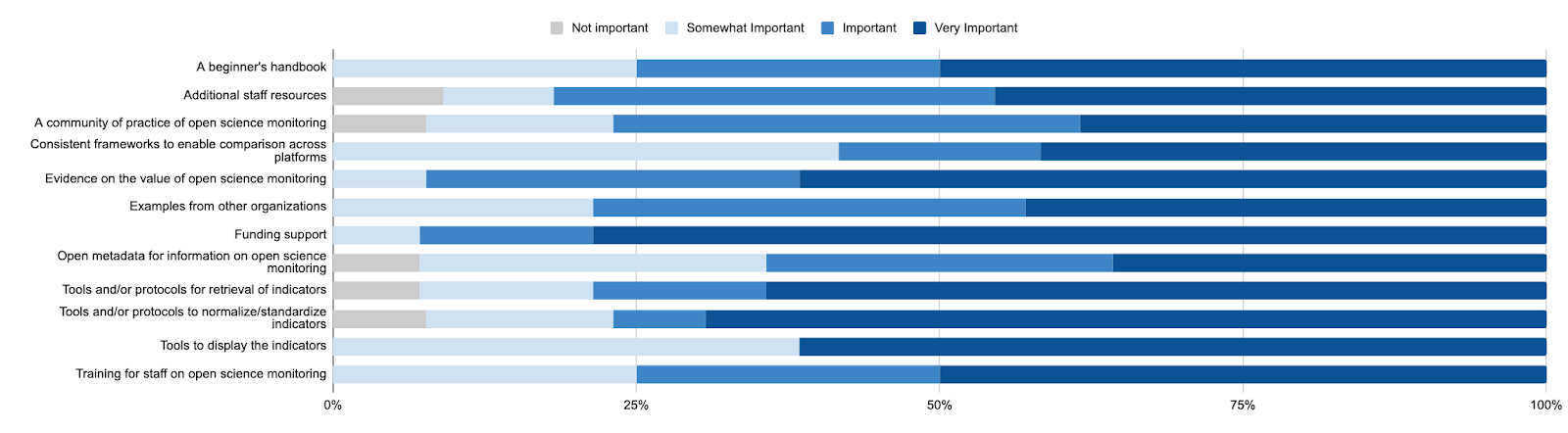

For scholarly content providers not yet undertaking open science monitoring, the hierarchy of needs is remarkably consistent. Funding is the primary enabler for starting an effort and is most frequently rated as very important. Close behind are capacity measures (staff training, a beginner’s handbook, and additional staff), signalling that know-how and people are immediate requirements. Standards and data plumbing also rank highly, with calls for consistent frameworks, open and PID-rich metadata, and normalization and retrieval protocols that make monitoring feasible and comparable (Figure 6).

Fig. 6: Enablers of open science monitoring for those who aren’t monitoring yet

The issue is prioritization, not disagreement. A credible playbook for the next year is to allocate funds to monitoring activities; to provide resources and training explicitly related to monitoring; to standardize indicators; to expand the use of open metadata and PIDs; and to make available evidence and case studies that showcase the benefits of open science monitoring to internal decision-makers.

Advancing open science monitoring with scholarly content providers

These findings provide a clear path to action for both scholarly content providers and OSMI. Scholarly content providers can share more of the indicators they collect, along with their methodology and data sources. They can adopt a broader set of output indicators while building a pipeline for outcome reporting. The survey results highlight interest in greater community alignment on open science indicators, to anchor comparability across platforms without constraining innovation at the edges. They also signal support for open metadata and transparency. These areas align with efforts across the broader research ecosystem to support the use of PIDs and open metadata, which funders and policymakers are also endorsing through their policies.

The OSMI Working Group will move ahead with these key areas: articulating the benefits of open science monitoring, developing guidance for its practical implementation, and recommending indicators with accompanying considerations.

Authors: Iratxe Puebla (https://orcid.org/0000-0003-1258-0746) & Eleonora Colangelo (https://orcid.org/0009-0006-5741-1590)

Copyright © 2025 Iratxe Puebla, Eleonora Colangelo. Distributed under the terms of the Creative Commons Attribution 4.0 License.