Several weeks ago, Cameron Neylon kicked off a good idea: listing a few articles he was keen to read and telling us why.

Based on 'open tabs' in their browser, approximately every month one person will share their reading wishlists so we can curate some of the 'must-read' posts of the moment.

Each author concludes by tagging the next. To take up the mantle, Cameron nominated Aaron Tay, Analytics Manager at Singapore Management University Libraries and something of an industry analyst on his personal blog, Musings About Librarianship.

Here's what Aaron is excited about reading...

I tend to find interesting articles via Twitter or from following references of such articles. For articles that look potentially interesting I will usually put the link in my Google Keep and tag them with “Professional Development”, which I try to clear every week.

I’ve been skimming some of these tagged links recently, and these are some that I found really intriguing. They have earned a place in my browser tabs because I can’t bear to close them or I am still thinking about the implications of what I read.

Habermann, T. (2022, March 7). Metadata Life Cycle: Mountain or Superhighway? [Blog]. Metadata Game Changers.

https://metadatagamechangers.com/blog/2022/3/7/ivfrlw6naf7am3bvord8pldtuyqn4r

I have been slowly working my way through Ted Habermann’s blog posts, which often cover parts of his published research that I am excited to read, but this post is the one that really made me sit up and take notice.

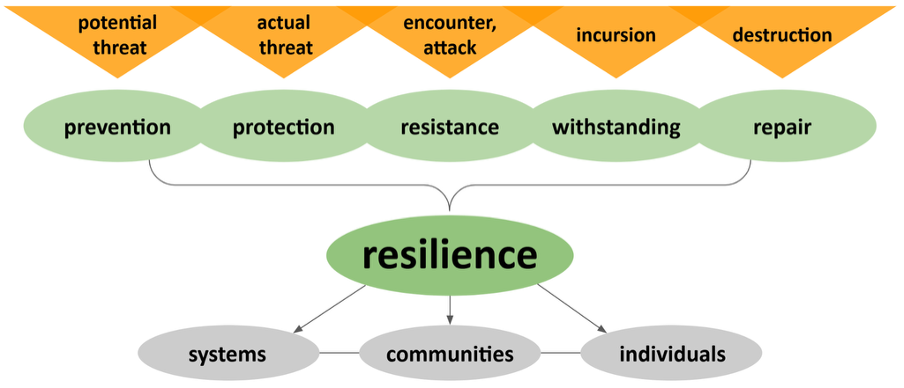

Habermann shows that most data repositories are minting DOIs while depositing only the minimal set of metadata that DataCite requires to issue a DOI. The fact that there isn’t much metadata here is perhaps only somewhat surprising, as I know many repository managers are of the view that making too many fields mandatory will turn users off depositing.

More astonishing to me is his finding that institutional repositories do have substantial additional metadata, entered by the researcher when depositing or enhanced by other means, but these metadata simply do not make the climb up what he calls the “Metadata Mountain” into DataCite. They fall by the wayside.

Using the DRUM repository at the University of Minnesota as an example, he identified many metadata fields that the repository had collected but that were not included in, or translated into, the DataCite schema. These included fields such as abstract, keywords, and funder.

Of course, all these fields affect the FAIRness (findability, accessibility, interoperability, and reusability) of the datasets. By working to extract such data from the repository records and updating the DataCite metadata, he managed to increase the completeness of these fields from 15% to 32%.
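To make the idea of "completeness" concrete, here is a minimal Python sketch. It is not Habermann's method: the field names, the record layout, and the choice to measure completeness as the share of filled (record, field) slots are all assumptions made for illustration.

```python
# Hypothetical field names for illustration; these are not DataCite schema property names.
FIELDS = ("abstract", "keywords", "funder")


def completeness(records, fields=FIELDS):
    """Return the share of (record, field) slots that hold a non-empty value."""
    records = list(records)
    if not records or not fields:
        return 0.0
    filled = sum(1 for record in records for field in fields if record.get(field))
    return filled / (len(records) * len(fields))


def enrich(datacite_record, repository_record, fields=FIELDS):
    """Fill empty DataCite fields with values the repository already holds."""
    merged = dict(datacite_record)
    for field in fields:
        if not merged.get(field) and repository_record.get(field):
            merged[field] = repository_record[field]
    return merged


# Made-up records: what was sent to DataCite versus what the repository collected.
datacite = [
    {"abstract": "", "keywords": [], "funder": ""},
    {"abstract": "A", "keywords": [], "funder": ""},
]
repository = [
    {"abstract": "Soil data", "keywords": ["soil"], "funder": ""},
    {"abstract": "A", "keywords": [], "funder": "NSF"},
]

before = completeness(datacite)
enriched = [enrich(d, r) for d, r in zip(datacite, repository)]
after = completeness(enriched)
print(f"completeness before: {before:.2f}, after: {after:.2f}")
```

The point of the sketch is only that the gap is measurable: the repository already holds the values, so the improvement comes from copying them across rather than asking depositors for more.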

There’s a lot more to his work, e.g. his approach to quantifying and visualizing FAIRness by mapping DataCite fields into four categories on a radar chart, and I intend to follow up on Ted’s recent papers. But I’m struck by the practical implications here for institutional repository managers. Why are we collecting metadata but not exposing it properly?

This is particularly so when the blog post suggests this issue isn’t present as much in subject repositories such as Zenodo, Dryad, Dataverse.

On reflection, this issue of repositories capturing data and making it appear only in human-readable format or at least formats that are not harvested by relevant machines is not a new problem.

I have encountered similar issues in the past with our own repository. In one incident, the repository manager had painstakingly added version information to each paper deposited in the repository, but it was still not picked up by the Unpaywall crawler. Another related incident involved trying to figure out why data citations from datasets in our data repository were not appearing via Scholix, despite us supposedly providing the information when creating the metadata at the time of deposit.

Perhaps we have a tendency to assume that if we as humans can see the metadata in the repository’s display, our job is done.

Porter, S. J. (2022). Measuring research information citizenship across ORCID practice. Frontiers in Research Metrics and Analytics, 7, 779097.

https://doi.org/10.3389/frma.2022.779097

More metadata fun! This time a study on ORCID. I was recently asked about Singapore’s efforts on PID strategy. While I know very little about that, it did set me thinking. What was the ORCID penetration rate in Singapore and in my institution? How did we compare to others?

This is where this paper is timely. To determine ORCID use by authors, one needs a benchmark or gold standard to compare against and this is where Dimensions comes in.

I intend to look closer at the methodology, but the essential point here is that this study measures ORCID use in two dimensions.

Firstly there is what is called ORCID adoption defined as

“the percentage of researchers in a given year who have at least one publication with a DOI linked to their ORCID iD either in ORCID directly or identified within the Crossref file”
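As a rough illustration of that definition, the calculation can be sketched in Python. This is not the paper's pipeline: the record layout and the `linked_to_orcid` flag are hypothetical, and the input is assumed to be the researchers already identified as active in the year of interest.

```python
def orcid_adoption(researchers):
    """Percentage of researchers with at least one DOI-bearing publication linked to their ORCID iD.

    `researchers` is assumed to hold only researchers active in the year of interest.
    """
    researchers = list(researchers)
    if not researchers:
        return 0.0
    adopters = sum(
        1
        for researcher in researchers
        if any(
            pub.get("doi") and pub.get("linked_to_orcid")
            for pub in researcher["publications"]
        )
    )
    return 100.0 * adopters / len(researchers)


# Made-up sample with placeholder DOIs.
sample = [
    {"publications": [{"doi": "10.1234/a", "linked_to_orcid": True}]},
    {"publications": [{"doi": "10.1234/b", "linked_to_orcid": False}]},
    {"publications": [{"doi": None, "linked_to_orcid": True}]},
    {"publications": [
        {"doi": "10.1234/c", "linked_to_orcid": False},
        {"doi": "10.1234/d", "linked_to_orcid": True},
    ]},
]

adoption = orcid_adoption(sample)
print(f"ORCID adoption: {adoption:.1f}%")
```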

However, a researcher might create an ORCID iD only because it is required when submitting to a journal or applying for funding, and otherwise ignore the ORCID profile. As such, the study also measures the completeness of the ORCID profile, which they argue measures engagement.

The paper is particularly interesting since they provide evidence on adoption and engagement using these metrics across

- Countries

- Research Categories

- Funders

- Publishers

The author of the paper even indulged me when I asked for a breakdown of Singapore institutions!

All in all, the paper has a lot of interesting findings and implications that are worth looking at. Some are even, on the surface, surprising. For example, they find that Humanities research tends to have lower adoption but higher engagement than, say, Medical and Health Sciences.

Part of this can be explained by the finding that while most publishers do accept ORCID iDs, many still accept an ORCID iD for only one author per paper. As Humanities research tends to have fewer authors per paper than medical research, this partly explains why, on average, the ORCID profiles of Humanities researchers tend to be more complete.

Bender, E. (2022, April 18). On NYT Magazine on AI: Resist the Urge to be Impressed [Medium]. Emily M. Bender.

I’ve been reading about and playing with language models like GPT-3 for the last 2 years. More recently, tools like Elicit.org have started to employ language models for academic research and have set out their roadmap in “Elicit: Language Models as Research Assistants”.

The results for Elicit.org are quite impressive given it is a very early-stage tool, but what pitfalls are there? Are we too eager to employ such technologies? Can they really be used to support systematic reviews, etc.? I know one of the problems of language models is that they can make up answers or “hallucinate”, but is that the only issue? See also “How to use Elicit responsibly”.

I know there is a fairly recent technical paper making the rounds, “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?”, that heavily critiques language models and provides an overview of the risks of using them, but I haven’t had the time to look at it, and I fear it may be overly technical for me.

This is where I came across the piece entitled “On NYT Magazine on AI: Resist the Urge to be Impressed”. Given this post is by Emily Bender, who is one of the co-authors of the Stochastic Parrots paper, I suspect this will provide the viewpoint of an expert who is very skeptical and critical of language models.

This Medium post is itself a response to the NYT piece “A.I. Is Mastering Language. Should We Trust What It Says?”, which is worth a read.

Domain Repositories Enriching the Global Research Infrastructure. (2022, April 20). [YouTube]. Environmental Data Initiative.

I’m going to cheat by ending with yet another reference to Ted Habermann. This is a talk he gave recently that covers a good portion of his recent work.

It’s a fascinating talk with many ideas including

- “Identifier spreading”: the idea of enhancing repository records with identifiers by using reasonable assumptions. E.g., a dataset record might not have ORCID iDs in the data repository, but it might be referenced by a journal article which itself has ORCID iDs, so one can infer that the dataset should have the same ORCID iDs. You can do the same with affiliations/ROR.

- How difficult is it to map affiliations for repositories to ROR? Many institutional repositories will have an easier time because they only need a small number of affiliations. A few general and subject repositories like Dryad/Zenodo will have a much more difficult task.

- How do you measure completeness of identifiers/connectivity? Ted proposes a metric and a visualization. He also talks about measuring FAIRness and visualizing DataCite metadata completeness, as mentioned earlier.
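The “identifier spreading” idea from the first bullet can be sketched in a few lines of Python. This is an illustrative guess at the mechanics, not the approach shown in the talk: the record layout is hypothetical, the DOI and ORCID iD are placeholders, and matching dataset creators to article authors by normalized name is an added assumption.

```python
def normalize(name):
    """Lower-case a name and collapse whitespace so simple variants match."""
    return " ".join(name.lower().split())


def spread_orcids(datasets, articles):
    """Copy ORCID iDs from article authors onto same-named creators of cited datasets."""
    # Work on copies so the input records are left untouched.
    enriched = {
        doi: {**record, "creators": [dict(c) for c in record["creators"]]}
        for doi, record in datasets.items()
    }
    for article in articles:
        orcid_by_name = {
            normalize(author["name"]): author["orcid"]
            for author in article["authors"]
            if author.get("orcid")
        }
        for doi in article["cited_datasets"]:
            record = enriched.get(doi)
            if record is None:
                continue
            for creator in record["creators"]:
                if creator.get("orcid"):
                    continue  # never overwrite an identifier that is already present
                match = orcid_by_name.get(normalize(creator["name"]))
                if match:
                    creator["orcid"] = match
    return enriched


# Placeholder identifiers and made-up names, for illustration only.
datasets = {
    "10.1234/example-dataset": {
        "creators": [
            {"name": "Jane  Doe", "orcid": None},
            {"name": "Sam Lee", "orcid": None},
        ]
    }
}
articles = [
    {
        "cited_datasets": ["10.1234/example-dataset"],
        "authors": [{"name": "jane doe", "orcid": "0000-0000-0000-0000"}],
    }
]

result = spread_orcids(datasets, articles)
print(result["10.1234/example-dataset"]["creators"])
```

Name matching is the weak point of any such inference, so in practice the inferred identifiers would probably need review before being written back to the repository.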

Tune in next month to find out some more must-read 'open tabs'. I’m tagging Bianca Kramer (scholarly communication/open science librarian at Utrecht University Library), someone who has been a force in Scholarly Communication and Open Science circles and an academic librarian whom I highly respect.

Copyright © 2022 Aaron Tay. Distributed under the terms of the Creative Commons Attribution 4.0 License.