Although advances in artificial intelligence (AI)1 have been unfolding for decades, progress in the last six months has come faster than many expected. The public release of ChatGPT in November 2022, in particular, has opened up new possibilities and heightened awareness of AI's potential role in various aspects of our work and life.

It follows that in the context of the publishing industry, AI also holds the promise of transforming multiple facets of the publishing process2. In this blog post, we begin the development of a rough taxonomy for understanding how and where AI can and/or should play a role in a publisher’s workflow.

We intend to iterate on this taxonomy (for now, we will use the working title ‘Scholarly AI Taxonomy’).

Scholarly AI Taxonomy

To kickstart discussions on AI's potential impact on publishing workflows, we present our initial categorization of the "Scholarly AI Taxonomy." This taxonomy outlines seven key roles that AI could potentially play in a scholarly publishing workflow:

- Extract: Identify and isolate specific entities or data points within the content.

- Validate: Verify the accuracy and reliability of the information.

- Generate: Produce new content or ideas, such as text or images.

- Analyse: Examine patterns, relationships, or trends within the information.

- Reformat: Modify and adjust information to fit specific formats or presentation styles.

- Discover: Search for and locate relevant information or connections.

- Translate: Convert information from one language or form to another.

The above is the first pass at a taxonomy. To flesh these roles out, we have provided examples to illustrate each category.

We fully recognise that some of the examples below, when further examined, may be miscategorized. Further, we recognise that some examples could be illustrations of several of these categories at play at once and don’t sit easily within just one of the items listed. We also acknowledge that the categories themselves will need thorough discussion and revision going forward. However, we hope that this initial taxonomy can play a role in helping the community understand what AI could mean for publishing processes.

Also note, in the examples we are not making any assertions about the accuracy of AI when performing these tasks. There are a lot of discussions already on whether the current state of AI tools can do the following activities well. We are not debating that aspect of the community discussion; that is for publishers and technologists to explore further as the technology progresses and as we all gain experience using these tools.

These categories are only proposed as a way of understanding the types of contributions AI tools can make. That being said, some of the below examples are more provocative than others in an attempt to help the reader examine what they think and feel about these possibilities.
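For tool builders who want to record which role an AI feature plays, the seven categories could be represented as combinable flags, so that an example spanning several categories does not have to be forced into one. The following is a minimal sketch under that assumption; the class name `AIRole`, the `EXAMPLE_TAGS` mapping, and the tag assignments themselves are hypothetical illustrations, not part of the taxonomy.

```python
from enum import Flag, auto
from typing import List


class AIRole(Flag):
    """The seven roles of the working taxonomy, as combinable flags."""
    EXTRACT = auto()
    VALIDATE = auto()
    GENERATE = auto()
    ANALYSE = auto()
    REFORMAT = auto()
    DISCOVER = auto()
    TRANSLATE = auto()


# Hypothetical tagging of two examples from this post. The assignments are
# illustrative and just as open to recategorisation as the examples themselves.
EXAMPLE_TAGS = {
    "Create accessible text descriptions for an image": AIRole.ANALYSE | AIRole.GENERATE,
    "Identify citations within a text": AIRole.EXTRACT,
}


def roles_of(task: str) -> List[str]:
    """Return the name of every role a tagged task touches, in taxonomy order."""
    tagged = EXAMPLE_TAGS[task]
    return [role.name for role in AIRole if role in tagged]
```

Using flags rather than a single label keeps the overlap between categories visible instead of hiding it behind a forced choice.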

Initial categorization

Our initial seven categories are detailed further below.

1. Extract - Identify and isolate specific entities or data points within the content

In the extraction stage, AI-powered tools can significantly streamline the process of identifying and extracting relevant information from content and datasets. However, an over-reliance on AI for this task can lead to errors if the models are not well-tuned or lack the necessary context to identify entities accurately. Some speculative examples:

- Identifying author names and affiliations from a submitted manuscript to pre-fill forms and save time during submission while increasing the accuracy of the input.

- Extracting key terms and phrases for indexing purposes.

- Isolating figures and tables from a research article for separate processing.

- Extracting metadata, such as title, abstract, and keywords, from a document.

- Identifying citations within a text for reference management.

2. Validate - Verify the accuracy and reliability of the information

AI-based systems can validate information by cross-referencing data against reliable sources or expected structures, ensuring content conformity, accuracy and/or credibility. While this can reduce human error, it is essential to maintain a level of human oversight, as AI models may not always detect nuances in language or identify reliable sources. Some examples:

- Cross-referencing citations to ensure accuracy and proper formatting.

- Verifying author affiliations against an established database.

- Ensuring proper image attribution and permissions.

- Checking factual information in an article against trusted sources.

- Validating claims made in a scientific paper against previous studies.
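To make the affiliation example concrete, here is a minimal sketch of checking an extracted affiliation against a list of known institutions. It uses plain string similarity from Python's standard library as a stand-in, not an AI model, and the `KNOWN_INSTITUTIONS` list, the function name, and the `cutoff` value are all assumptions chosen for illustration.

```python
from difflib import get_close_matches
from typing import List, Optional

# Hypothetical stand-in for an established affiliation database.
KNOWN_INSTITUTIONS = [
    "University of Cape Town",
    "University of Cambridge",
    "University of California, Davis",
]


def match_affiliation(
    raw: str,
    known: List[str] = KNOWN_INSTITUTIONS,
    cutoff: float = 0.75,  # assumed threshold; would need tuning on real data
) -> Optional[str]:
    """Return the closest known institution, or None if nothing is similar enough."""
    # Compare case-insensitively, then map back to the canonical spelling.
    lookup = {name.lower(): name for name in known}
    hits = get_close_matches(raw.lower(), list(lookup), n=1, cutoff=cutoff)
    return lookup[hits[0]] if hits else None
```

A `None` result is the useful case for human oversight: rather than guessing, the workflow can route the unmatched affiliation to a person.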

3. Generate - Produce new content or ideas, such as text or images

AI can create high-quality text and images, saving time and effort for authors and editors. However, the content generated by AI may contain factual inaccuracies, lack creativity, or inadvertently reproduce biases present in the training data, necessitating human intervention to ensure accuracy, quality, originality, and adherence to ethical guidelines. Some examples:

- Generating social media content (e.g., summarising longer text to a tweetable length) or promotional content for a new publication.

- Creating keyword lists for search engine optimization (SEO).

- Automatically generating an abstract or summary of a manuscript, particularly a plain language summary pitched at a certain audience.

- Creating a list of suggested article titles based on the content and target audience.

- Producing visually engaging charts or graphs from raw data.

4. Analyse - Examine patterns, relationships, or trends within the information

AI-driven data analytics tools can help publishers extract valuable insights from their content, identifying patterns and trends to optimize content strategy. While AI can provide essential information, over-reliance on AI analytics may lead to overlooking important context or misinterpreting data, requiring human analysts to interpret findings accurately. Some examples:

- Analysing an image to create accessible text descriptions.

- Determining the sentiment of reviews.

- Identifying trending topics in a specific field to guide editorial direction.

- Analysing the readability level of a manuscript.

- Discovering patterns in citation networks to identify influential articles and authors.

5. Reformat - Modify and adjust information to fit specific formats or presentation styles

AI can reformat content for specific media channels or alternative structures, enhancing user experience and accessibility. However, AI-generated formatting may not always be ideal or adhere to specific style guidelines, requiring human editors to fine-tune the formatting. Some examples:

- Formatting content to comply with a specific style guide.

- Adapting a long-form article for a shorter, mobile-friendly version.

- Converting a manuscript into XML or converting datasets to open formats.

- Rearranging content to fit different print and digital formats.

- Adjusting images and graphics for optimal display across various devices.

6. Discover - Search for and locate relevant information or connections

AI can efficiently find and link information about a subject, streamlining the research process. However, AI-driven information discovery may yield irrelevant, incorrect, or outdated results, necessitating human verification and filtering to ensure accuracy and usefulness. Some examples:

- Finding relevant articles within a publisher’s corpus to recommend for further reading.

- Identifying potential reviewers for a submitted manuscript based on their expertise.

- Discovering trending topics for a call for papers.

- Locating similar works to provide context for a piece of content.

- Searching for related images or multimedia to accompany a text.

7. Translate - Convert information from one language or form to another

AI can quickly translate languages and sentiments, making content more accessible and understandable to diverse audiences. However, AI translations can sometimes be inaccurate or lose nuances in meaning, especially when dealing with idiomatic expressions or cultural context, necessitating the involvement of human translators for sensitive or complex content. Some examples:

- Translating a research article or book into another language.

- Converting scientific jargon into more accessible language for a popular science article.

- Adapting a text's cultural references to be more understandable for a global readership.

- Translating the sentiment of a text.

- Converting spoken language into written transcripts (or vice versa) for interviews or podcasts.

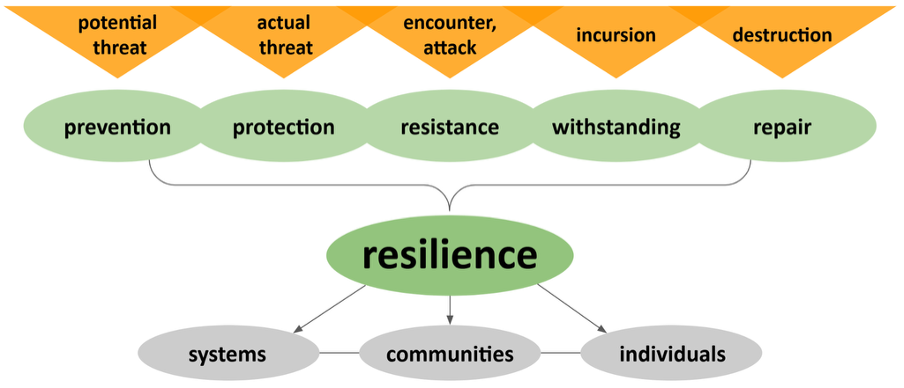

Balancing AI and Human Intervention in Publishing Workflows

There is potential for AI to benefit publishing workflows. Still, it's crucial to identify where AI should play a role and when human intervention is required to check and validate outcomes of assisted technology. In many ways, this is no different to how publishing works today. If there is one thing publishers do well, and sometimes to exaggerated fidelity, it is quality assurance.

However, AI tools offer several new dimensions which can bring machine assistance into many more parts of the process at a much larger scale. This, together with the feeling we have that AI is, in fact, in some ways ‘doing work previously considered to be the sole realm of the sentient’ and the need for people and AI machines to ‘learn together’ so those outcomes can improve, means there are both factual and emotional requirements to scope, monitor, and check these outcomes.

Consequently, workflow platforms must be designed with interfaces allowing seamless ‘Human QA’ at appropriate points in the process. These interfaces should enable publishers to review, edit, and approve AI-generated content or insights, ensuring that the final product meets the required standards and ethical guidelines. Where possible, the ‘Human QA’ should feed back into the AI processes to improve future outcomes; this also needs to be considered by tool builders.

To accommodate this 'Human QA', new types of interfaces will need to be developed in publishing tools. These interfaces should facilitate easy interaction between human users and AI-generated content, allowing for necessary reviews and modifications. For instance, a journal workflow platform might offer a feature where users are asked to 'greenlight' a pre-selected option from a drop-down menu (e.g., institutional affiliation), generated by AI. This way, researchers and editors can quickly validate AI-generated suggestions while providing feedback to improve the AI's performance over time. Integrating such interfaces not only ensures that the content adheres to the desired quality standards and ethical principles but also expedites the publishing process, making it more efficient.
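The 'greenlight' interaction described above could be modelled roughly as follows. This is a sketch under assumptions, not a description of any existing platform: the names `Suggestion` and `HumanQA`, the status strings, and the shape of the feedback record are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Suggestion:
    """An AI-proposed value that stays pending until a person reviews it."""
    field_name: str
    proposed: str
    status: str = "pending"  # becomes "approved" or "corrected"
    final: Optional[str] = None


@dataclass
class HumanQA:
    """Records each review decision so it can be fed back to the AI process."""
    feedback: List[Tuple[str, str, str]] = field(default_factory=list)

    def greenlight(self, s: Suggestion) -> Suggestion:
        """The reviewer accepts the pre-selected option unchanged."""
        return self._resolve(s, s.proposed, "approved")

    def correct(self, s: Suggestion, value: str) -> Suggestion:
        """The reviewer replaces the suggestion with their own value."""
        return self._resolve(s, value, "corrected")

    def _resolve(self, s: Suggestion, value: str, status: str) -> Suggestion:
        s.final, s.status = value, status
        # Each entry: (field, what the AI proposed, what the human decided).
        self.feedback.append((s.field_name, s.proposed, value))
        return s
```

The point of the design is that nothing AI-generated becomes final without a recorded human decision, and that both acceptances and corrections are logged, since both are useful signals for improving later suggestions.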

The Speed of Trust

Trust plays a large role in this process. As we learn more about the fidelity and accuracy of these systems and confront what AI processes can and can’t do well to date, we will need to move forward with building AI into workflows 'at the speed of trust.'

Adopting a "speed of trust" approach means being cautious yet open to AI's potential in transforming publishing workflows. It involves engaging in honest conversations about AI's capabilities and addressing concerns, all while striking a balance between innovation and desirable community standards. As we navigate this delicate balance, we create an environment where AI technology can grow and adapt to better serve the publishing community.

For example, as a start, when integrating AI into publishing workflows, we believe it is essential to provide an ‘opt-in’ and transparent approach to AI contributions. Publishers and authors should be informed about the extent of AI involvement and its limitations, and presented with interfaces allowing them to make informed decisions about when and how AI will be used. This transparent ‘opt-in’ approach helps build trust, allows us to iterate forward as we gain more experience, and sets the stage for discussions and practices regarding ethical AI integration in publishing workflows.
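One way a platform might express this transparent 'opt-in' approach is a settings object in which every AI role is off until someone enables it, and every AI contribution is logged for disclosure. The sketch below assumes that design; `AIConsent`, its method names, and the role strings are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class AIConsent:
    """Per-submission AI settings: nothing runs unless explicitly enabled."""
    enabled_roles: Set[str] = field(default_factory=set)  # empty means all off
    disclosures: List[str] = field(default_factory=list)

    def opt_in(self, role: str) -> None:
        """Record that the author or publisher has enabled AI for this role."""
        self.enabled_roles.add(role)

    def may_run(self, role: str, description: str) -> bool:
        """Allow an AI task only if its role is enabled, and log it if so."""
        if role not in self.enabled_roles:
            return False
        # Kept so the extent of AI involvement can be shown to users later.
        self.disclosures.append(f"{role}: {description}")
        return True
```

Defaulting to an empty set makes the cautious behaviour the one that requires no action, which fits building AI into workflows at the speed of trust.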

Conclusion

The potential of AI in publishing workflows is immense, and we find ourselves at a time when the technology has taken a significant step forward. But it's essential to approach its integration with a balanced perspective. We can harness the power of AI while adhering to ethical standards and delivering high-quality content by considering both the benefits and drawbacks of AI, identifying areas for human intervention, maintaining transparency, and evolving our understanding of AI contributions.

The initial taxonomy outlined in this article can serve as a starting point for understanding how AI can contribute to publishing workflows. By categorising AI contributions in this way, we can also discuss the ethical boundaries of AI-assisted workflows more clearly and help publishers make informed decisions about AI integration.

By adopting a thoughtful strategy, the combined strengths of AI and human expertise can drive significant advancements and innovation within the publishing industry.

1 It's worth noting that we use the term AI here, but we are actually referring to large language models (LLMs); AI serves as useful shorthand since it's the common term used in our community. As we all gain more experience, being more accurate about how we use terms like AI and LLM will become increasingly important. A Large Language Model (LLM) can be described as a sophisticated text processor. It's an advanced machine learning model designed to process, generate, and understand natural language text.

2 By publishing, we are referring to both traditional journal-focused publishing models as well as emergent publishing models such as preprints, protocols/methods, micropubs, data, etc.

Many thanks to Ben Whitmore, Ryan Dix-Peek, and Nokome Bentley for the discussions that led to this taxonomy at our recent Coko Summit. This article was written with the assistance of GPT-4.

Copyright © 2023 Adam Hyde, John Chodacki, Paul Shannon. Distributed under the terms of the Creative Commons Attribution 4.0 License.